AI is increasingly used in the public sector, in areas such as transportation, video surveillance and fraud prevention. So what are the ethical challenges?

Artificial Intelligence (AI) has the potential to change the way we live and work. Embedding AI across all sectors could create thousands of jobs and drive economic growth. By one estimate, AI’s contribution to the United Kingdom could be as large as 5% of GDP by 2030. A number of public sector organisations are already successfully using AI for tasks ranging from fraud detection and video surveillance to answering customer queries. The potential uses for AI in the public sector are significant, but they have to be balanced against ethical, fairness and safety considerations.

At its core, AI is a research field spanning philosophy, logic, statistics, computer science, mathematics, neuroscience, linguistics, cognitive psychology and economics. AI can be defined as the use of digital technology to create systems capable of performing tasks commonly thought to require intelligence. AI is constantly evolving, but it generally involves machines using statistics to find patterns in large amounts of data, and performing repetitive tasks on that data without the need for constant human guidance.

There are many new concepts in the field of AI, and you may find it useful to refer to a glossary of AI terms. Most AI guidance focuses on machine learning. Machine learning is a subset of AI, and refers to the development of digital systems that improve their performance on a given task over time through experience. Machine learning is the most widely used form of AI, and has contributed to innovations like self-driving cars, speech recognition and machine translation. So, what are the most common uses of AI in the public sector, and how can we ensure public safety through ethical use?

DVSA

Each year, 66,000 testers conduct 40 million MOT tests in 23,000 garages across Great Britain. The Driver and Vehicle Standards Agency (DVSA) developed an approach that applies a clustering model to analyse vast amounts of testing data, which it then combines with insight from day-to-day operations to build a continually evolving risk score for garages and their testers. This allows the DVSA to direct its enforcement officers’ attention to garages or MOT testers who may be underperforming or committing fraud. Because areas of concern are identified in advance, the examiners’ preparation time for enforcement visits has fallen by 50%.
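
The DVSA’s actual model, features and scoring method are not described here, so what follows is only a minimal sketch of the general idea under stated assumptions: plain k-means clustering, written with the Python standard library, over two hypothetical per-tester features (fail rate and average test duration), plus a hypothetical risk score equal to the share of testers who fall outside a tester’s own cluster. Testers who end up in a small, unusual cluster score higher and would be candidates for a closer look.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(p: Sequence[float], centroids: List[Point]) -> int:
    """Index of the centroid closest to p."""
    return min(range(len(centroids)), key=lambda i: distance(p, centroids[i]))


def kmeans(points: List[Point], k: int, iterations: int = 50) -> List[int]:
    """Plain k-means clustering; returns a cluster label for each point."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    # Deterministic start for this sketch: the first k points are the initial centroids.
    centroids: List[Point] = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        labels = [nearest(p, centroids) for p in points]
        updated: List[Point] = []
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            # Move each centroid to the mean of its members; keep it in place if the cluster is empty.
            if members:
                updated.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                updated.append(centroids[i])
        if updated == centroids:  # converged: assignments no longer change
            break
        centroids = updated
    return [nearest(p, centroids) for p in points]


def risk_scores(features: Dict[str, Point], k: int) -> Dict[str, float]:
    """Hypothetical risk score: share of all testers NOT in a tester's cluster.

    Testers who land in small, unusual clusters score close to 1.
    """
    names = list(features)
    labels = kmeans([features[n] for n in names], k)
    sizes = Counter(labels)
    return {n: 1 - sizes[label] / len(names) for n, label in zip(names, labels)}


# Hypothetical data: (fail rate in %, average test duration in minutes) per tester.
testers = {
    "tester_a": (30.0, 45.0),
    "tester_b": (32.0, 48.0),
    "tester_c": (28.0, 44.0),
    "tester_d": (31.0, 46.0),
    "tester_e": (2.0, 8.0),  # almost never fails a vehicle and tests very quickly
}

scores = risk_scores(testers, k=2)
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.2f}")
```

Cluster size is only one possible way to turn clusters into a score; distance from a cluster centre, or combining the clusters with other operational signals as the DVSA describes, would be equally plausible choices. In practice the features would also need scaling so that no single measure dominates the distance calculation.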

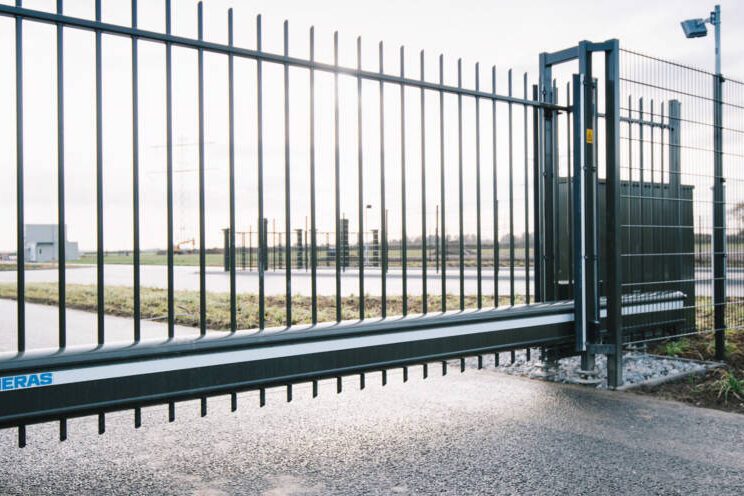

Video surveillance

We usually think of surveillance cameras as digital eyes, watching over us or watching out for us, depending on your view. But really, they’re more like portholes: useful only when someone is looking through them. Sometimes that means a human watching live footage, usually from multiple video feeds. Most surveillance cameras are passive, however. They’re there as a deterrent, or to provide evidence if something goes wrong. Did your car get stolen? Check the CCTV.

But this is changing — and fast. Artificial intelligence is giving surveillance cameras digital brains to match their eyes, letting them analyse live video with no humans…

To read the rest of this feature, see our latest issue.

Media contact

Rebecca Morpeth Spayne,

Editor, Security Portfolio

Tel: +44 (0) 1622 823 922